Jayanta Sadhu

- Hi, I'm Jayanta, a first-year Ph.D. student at the University of Utah (starting Fall 2025)

- Passionate about Natural Language Processing and Generative AI, especially in the fields of Interpretability, Cognition, Reasoning and AI Fairness.

- Graduated from CSE @ BUET

About Me

👋 Hi, I'm Jayanta. I'm a learner and aspiring researcher. My interests lie in Natural Language Processing (NLP), AI Fairness, Responsible AI and Interpretability of AI. I am currently pursuing my Ph.D. at the University of Utah.

💼 I was previously a Machine Learning Engineer @ IQVIA.

📜 I am the first author of three research papers on bias and fairness. Our paper "An Empirical Study on the Characteristics of Bias upon Context Length Variation for Bangla" has been accepted to Findings of the Association for Computational Linguistics: ACL 2024. My other two papers study emotion-related and social biases in LLM responses for the Bangla language. One of them, "An Empirical Study of Gendered Stereotypes in Emotional Attributes for Bangla in Multilingual Large Language Models", has been accepted at the 5th Workshop on Gender Bias in Natural Language Processing at ACL 2024! Please refer to my research section for more details.

🏫 I graduated from the Department of Computer Science and Engineering (CSE) at Bangladesh University of Engineering and Technology (BUET), a top-ranked engineering university in Bangladesh that takes pride in producing some of the country's best engineers.

📚 My undergraduate thesis was on "Detecting Gender Bias in Bangla Language Models" under the supervision of Dr. Rifat Shahriyar. In this work, we presented a detailed analysis of the responses of Bangla language models in both static and contextual settings to detect gender bias. Our nuanced analysis for Bangla gave us insight into how the models respond to the inclusion of explicit or implicit gender.

🏆 During my undergraduate years at BUET, I regularly participated in and excelled at competitions including Dhaka AI 2020 and HackNSU 2020. I also took on leadership roles in departmental events, especially our department's annual event, BUET CSE FEST.

🎡 In my leisure time, I enjoy reading thriller novels, listening to music, and watching TV series and movies.

😃 Fun Fact: My personality type is INFP. I like to call myself an Empirical Skeptic.

News and Updates

Work Experience

University of Utah

Graduate Teaching Assistant, August 2025 - Present

- Working as a Graduate Teaching Assistant for graduate-level courses in Computer Science.

- Courses: CS 6962, Sustainable Computing (Fall 2025)

Ph.D.

Computing

TA

Research & Publications

An Empirical Study of Gendered Stereotypes in Emotional Attributes for Bangla in Multilingual Large Language Models

Jayanta Sadhu, Maneesha Rani Saha (BUET), Dr. Rifat Shahriyar (Professor, BUET)

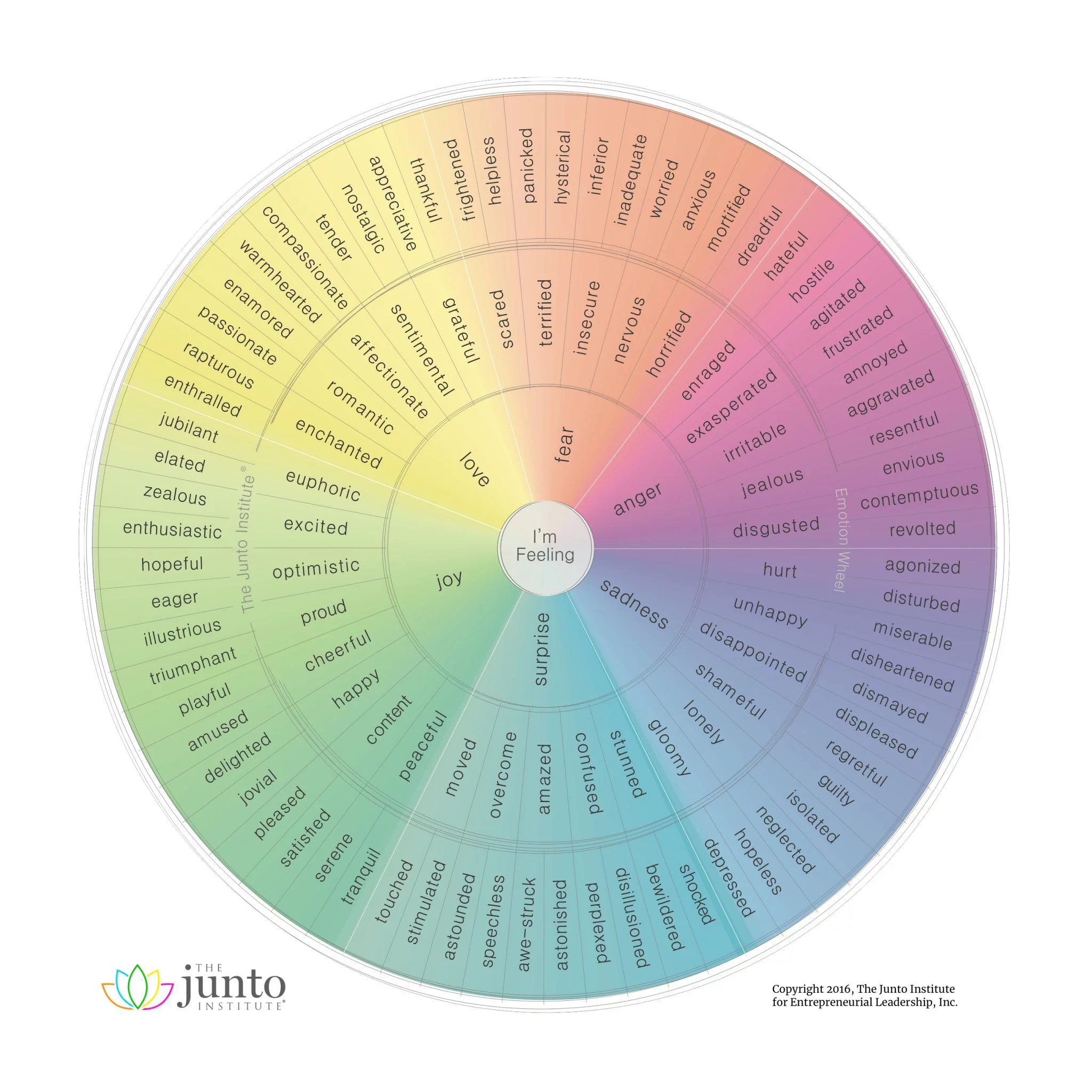

Details: This study investigated gendered stereotypes in emotional attributes within multilingual large language models (LLMs) for Bangla. It examined historical patterns in Bangla-speaking regions, where women have often been associated with emotions like empathy and guilt, while men have been linked to emotions such as anger and authority. We evaluated both closed- and open-source LLMs to identify gender biases in emotion attribution. The project included qualitative and quantitative analysis of LLM responses to Bangla gender attribution tasks and highlighted the influence of gendered role selection on these outcomes. We also developed and publicly shared datasets and code to support further research in Bangla NLP. Please refer to the paper link for more details.

Social Bias in Large Language Models For Bangla: An Empirical Study on Gender and Religious Bias

Jayanta Sadhu, Maneesha Rani Saha (BUET), Dr. Rifat Shahriyar (Professor, BUET)

Details: This research project, supervised by Dr. Rifat Shahriyar, examined social biases in large language models (LLMs) for the Bangla language. The study investigated two distinct types of social bias in Bangla LLMs: gender bias and religious bias. We developed a curated dataset to benchmark bias measurement and implemented two probing techniques for bias detection. To the best of our knowledge, this work is the first comprehensive bias assessment study for Bangla LLMs, and all code and resources are publicly available to support further research in bias detection for Bangla NLP. Please refer to the paper link for more details.

An Empirical Study on the Characteristics of Bias upon Context Length Variation for Bangla

Jayanta Sadhu*, Ayan Antik Khan (BUET)*, Abhik Bhattacharya (BUET), Dr. Rifat Shahriyar (Professor, BUET) (* equal contribution)

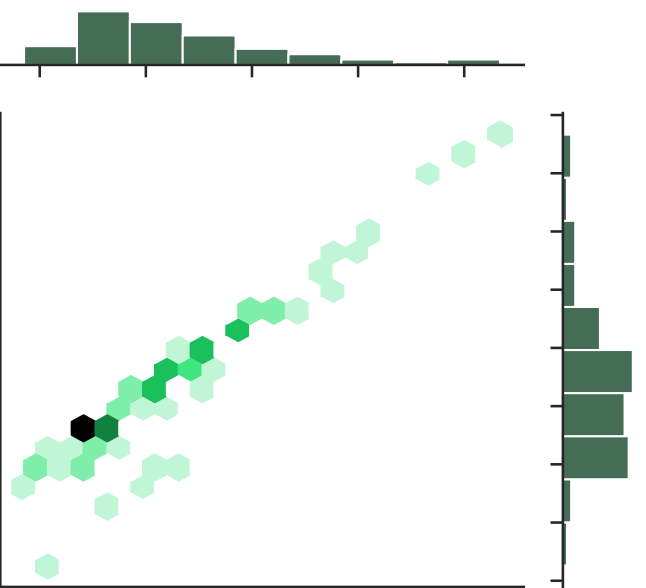

Details: This research project, which formed the basis of my undergraduate thesis under the supervision of Dr. Rifat Shahriyar, explored the nuances of gender bias detection in Bangla language models. We constructed a curated dataset for detecting gender bias in both static and contextual setups and compared different bias detection methods specifically tailored to Bangla. The study established benchmark statistics using baseline methods and analyzed bias in various language models supporting Bangla, including BanglaBERT, MuRIL, and XLM-RoBERTa. A key focus was understanding how the context length of templates and sentences affects bias detection outcomes. This work serves as a foundational study for bias detection in Bangla language models. Please refer to the paper link for more details.

Education

Ph.D. in Computing

University of Utah, August 2025 - Present

First-year Ph.D. student

B.Sc. in Computer Science and Engineering

Bangladesh University of Engineering and Technology, May 2018 - May 2023

CGPA: 3.85/4.00

H.S.C. in Science

Dhaka Residential Model College, July 2015 - June 2017

GPA: 5.00/5.00

Technical Skills

Programming Languages

C, C++, C#, Java, Python, JavaScript, SQL, Kotlin, Bash

Machine Learning Libraries

NumPy, Pandas, scikit-learn, PyTorch, Transformers, OpenAI, LangChain, OpenGL

Frameworks

ASP.NET, Django, Bootstrap, jQuery, Node.js, Flask

Database

Oracle, PostgreSQL, MySQL, MSSQL, MongoDB

Cloud

AWS, Vast.ai

Security Tools

Autopsy, Wireshark, Nmap

Graphic Designing

Figma, Canva

Miscellaneous

LaTeX, Firebase, Git, Docker, Kubernetes

Soft Skills

Team Management, Problem-Solving, Public Speaking, Creative Writing